ADAS & Autonomous Driving

// Divine Sim Suite

Divine Technology has developed integrated autonomous driving simulation software to address the safety challenges of autonomous driving. The software evaluates the performance and safety of autonomous driving sensors, algorithms, and systems.

We have implemented autonomous driving simulation technology to assess the core technologies of autonomous vehicles quickly and safely.

This virtual simulation technology allows many hazardous situations to be tested repeatedly, preparing vehicles for unexpected events on the road.

Divine Technology applies engineering expertise to sensor modeling so that simulated sensor output closely matches real-world conditions, providing highly reliable results.

Through our proprietary high-reliability autonomous driving simulation platform, Divine Sim Suite, we offer a comprehensive solution covering regulatory and legal compliance, edge-case scenarios, traffic flow simulation, thermal camera simulation, and high-reliability simulation.

// Features

// Evaluations

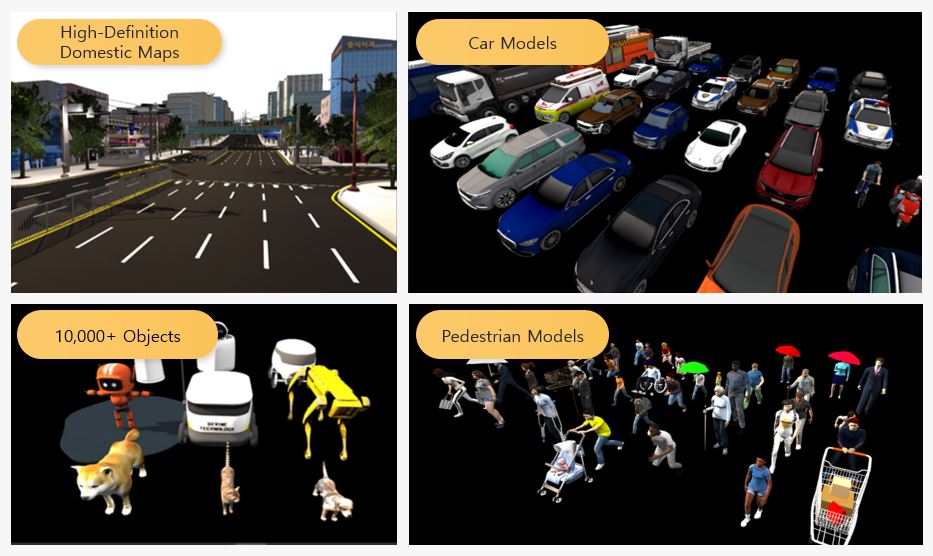

// 3D Object Library

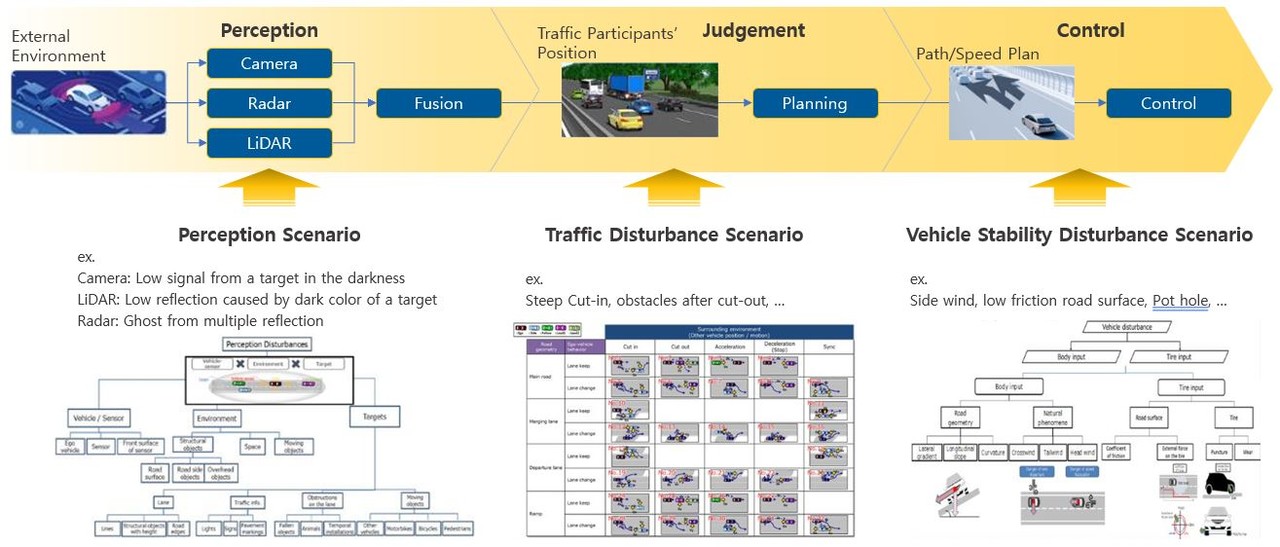

// Scenario

// Feasibility Study - Camera Sensor Simulation

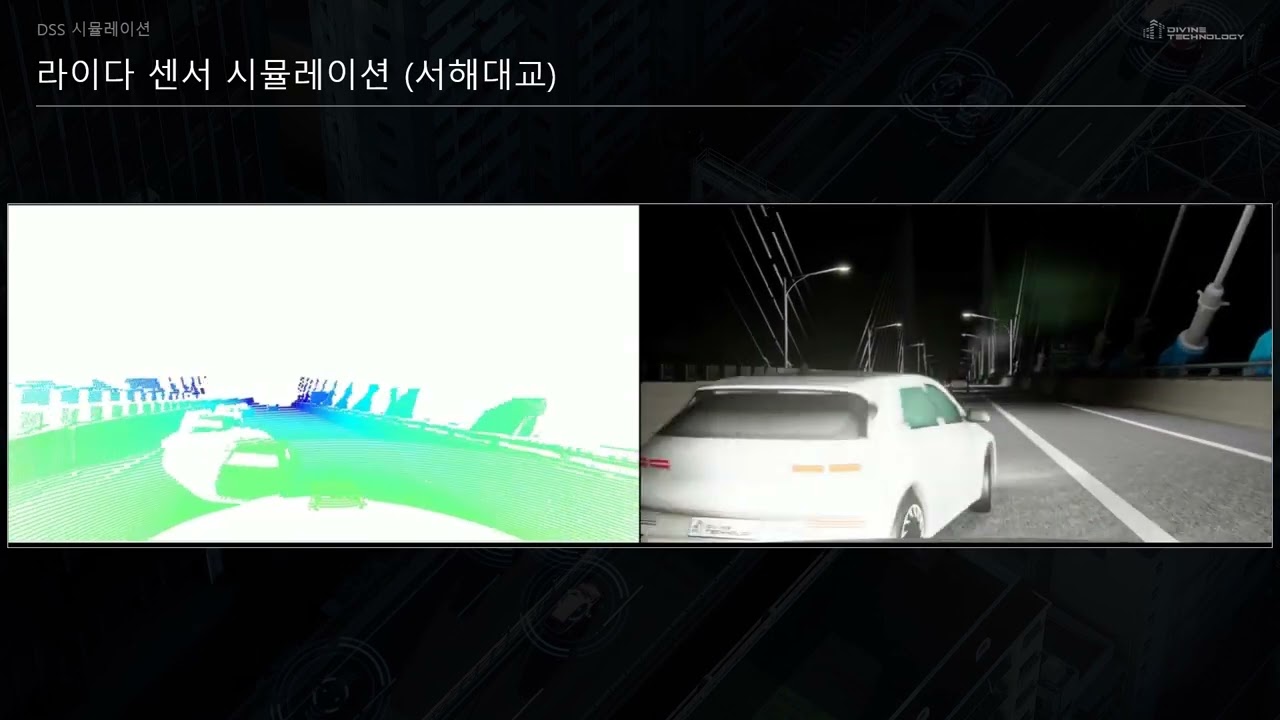

// Feasibility Study - LiDAR Sensor Simulation

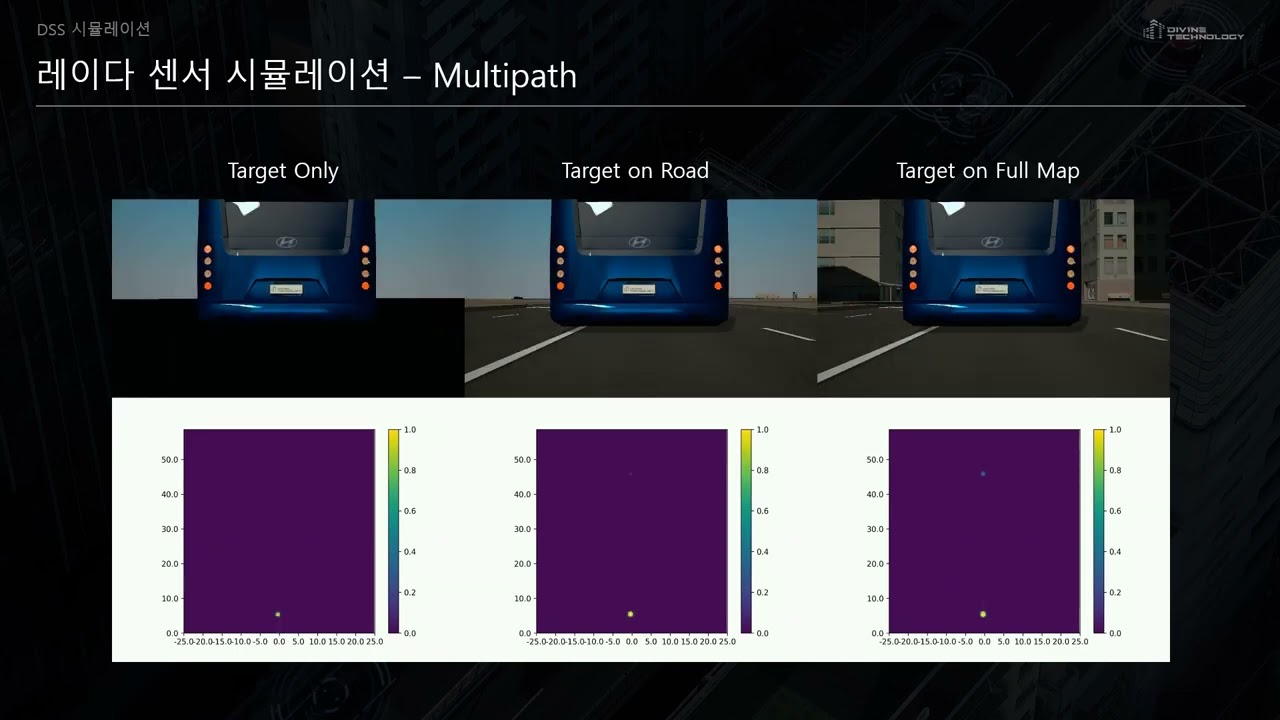

// Feasibility Study - Radar Sensor Simulation

// Feasibility Study - Autonomous Driving

We conducted autonomous driving tests on a custom-built virtual model of a road in Gangnam under dark, rainy nighttime conditions. In the virtual environment, every object is divided into material-specific layers, each with defined physical properties. Light sources such as streetlights, traffic lights, and headlights are assigned luminance values. The sensors mounted on the vehicle are modeled to behave like their real-world counterparts, which is what we mean by physics-based sensor modeling.
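To make the layer and luminance assignment more concrete, here is a minimal Python sketch of a scene description in which every object references a material layer and every light source carries a luminance value, plus a check that nothing is left undefined. The class names, property fields, and all numbers are hypothetical placeholders; they do not reflect the data model of Ansys AVxcelerate or any measured material or lamp data.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class MaterialLayer:
    """A material layer and the physical properties a sensor model would read (hypothetical fields)."""
    name: str
    visible_reflectance: float  # fraction of visible light reflected, 0..1
    lidar_reflectivity: float   # fraction of LiDAR energy returned, 0..1


@dataclass(frozen=True)
class SceneObject:
    name: str
    layer: str  # name of the MaterialLayer this object is assigned to


@dataclass(frozen=True)
class LightSource:
    name: str
    luminance_cd_m2: float  # assigned luminance in cd/m^2


def validate_scene(layers: List[MaterialLayer],
                   objects: List[SceneObject],
                   lights: List[LightSource]) -> List[str]:
    """Collect problems that would leave the scene incompletely defined for sensor simulation."""
    problems: List[str] = []
    known_layers = {layer.name for layer in layers}
    # Every layer property must be a valid fraction.
    for layer in layers:
        for value in (layer.visible_reflectance, layer.lidar_reflectivity):
            if not 0.0 <= value <= 1.0:
                problems.append(f"layer '{layer.name}': property {value} outside [0, 1]")
    # Every object must belong to a defined material layer.
    for obj in objects:
        if obj.layer not in known_layers:
            problems.append(f"object '{obj.name}': unknown material layer '{obj.layer}'")
    # Every light source must have a positive luminance.
    for light in lights:
        if light.luminance_cd_m2 <= 0.0:
            problems.append(f"light '{light.name}': luminance must be positive")
    return problems


# Placeholder values for illustration only; not measured material or lamp data.
LAYERS = [
    MaterialLayer("wet_asphalt", visible_reflectance=0.05, lidar_reflectivity=0.05),
    MaterialLayer("lane_paint", visible_reflectance=0.6, lidar_reflectivity=0.5),
    MaterialLayer("car_paint", visible_reflectance=0.3, lidar_reflectivity=0.3),
]
OBJECTS = [
    SceneObject("road_surface", "wet_asphalt"),
    SceneObject("center_line", "lane_paint"),
    SceneObject("parked_car_body", "car_paint"),
]
LIGHTS = [
    LightSource("streetlight_01", luminance_cd_m2=1.0e4),
    LightSource("traffic_light_red", luminance_cd_m2=1.0e3),
    LightSource("oncoming_headlight", luminance_cd_m2=1.0e5),
]

if __name__ == "__main__":
    print(validate_scene(LAYERS, OBJECTS, LIGHTS))  # prints []
```

Keeping material properties on shared layers rather than on individual objects means a change such as switching a surface to its wet variant is made once and applies to every object on that layer.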

Ansys AVxcelerate was used to define the physical properties of objects and to model the physics-based sensors, and it was integrated with the driving simulation platform CARLA. The sensor output is fed into the autonomous driving platform Autoware for perception and decision-making, and Autoware's resulting commands then control the ego vehicle in CARLA, closing the loop.
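The closed loop described above, running from the simulator through the physics-based sensor model and the perception and planning stack back to vehicle control, can be sketched in Python with toy stand-ins. `ToyWorld`, `sensor_model`, and `ad_stack` are hypothetical placeholders for the roles played by CARLA, Ansys AVxcelerate, and Autoware respectively; they do not call any of those tools' APIs, and the 1-D dynamics and braking rule are simplifying assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ControlCommand:
    """Stand-in for the AD stack's control output (throttle and brake in [0, 1])."""
    throttle: float = 0.0
    brake: float = 0.0


@dataclass
class ToyWorld:
    """Hypothetical 1-D stand-in for the simulator (CARLA's role in the loop)."""
    ego_x: float = 0.0        # ego position along the road (m)
    ego_v: float = 10.0       # ego speed (m/s)
    obstacle_x: float = 60.0  # static obstacle position (m)
    dt: float = 0.05          # fixed simulation step (s)

    def tick(self, cmd: ControlCommand) -> None:
        # Simple longitudinal dynamics: up to 3 m/s^2 from throttle, up to 8 m/s^2 braking.
        accel = 3.0 * cmd.throttle - 8.0 * cmd.brake
        self.ego_v = max(0.0, self.ego_v + accel * self.dt)
        self.ego_x += self.ego_v * self.dt


def sensor_model(world: ToyWorld, max_range: float = 100.0) -> Optional[float]:
    """Hypothetical sensor stage (AVxcelerate's role): range to the obstacle, None if out of range."""
    gap = world.obstacle_x - world.ego_x
    return gap if 0.0 <= gap <= max_range else None


def ad_stack(range_m: Optional[float], speed: float) -> ControlCommand:
    """Hypothetical perception and planning stage (Autoware's role)."""
    if range_m is None:
        return ControlCommand(throttle=0.3)
    # Brake when the gap is smaller than the stopping distance at 8 m/s^2 plus a 5 m margin.
    stopping_distance = speed ** 2 / (2 * 8.0)
    if range_m < stopping_distance + 5.0:
        return ControlCommand(brake=1.0)
    return ControlCommand(throttle=0.3)


def run_closed_loop(world: ToyWorld, steps: int) -> List[float]:
    """Run sensor -> AD stack -> control -> simulator in lockstep; return the sensed gaps."""
    gaps: List[float] = []
    for _ in range(steps):
        gap = sensor_model(world)         # 1. simulated sensor output
        cmd = ad_stack(gap, world.ego_v)  # 2. perception and decision-making
        world.tick(cmd)                   # 3. control applied, simulator advances one step
        if gap is not None:
            gaps.append(gap)
    return gaps


if __name__ == "__main__":
    world = ToyWorld()
    gaps = run_closed_loop(world, steps=400)
    print(f"closest approach: {min(gaps):.2f} m, final speed: {world.ego_v:.2f} m/s")
```

Stepping every stage in lockstep with a fixed time step keeps each sensor frame aligned with the control command computed from it; CARLA provides a synchronous mode with a fixed time step for this purpose.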